With almost 70% of the world’s population on the Internet, it has never been more critical for companies to handle massive streams of data updating in real-time. In data streaming, we all know the difference a few extra seconds can make. So do our customers.

As the online economy grows more competitive, you need tools to make your streaming data processes more efficient and user-friendly. That’s why we built Kafka Connectors Board (KCB), an open-source Kafka library that lets you manage and monitor connectors in your Kafka clusters. If that was a lot, no worries. By the end of this article, you’ll be an expert in the KCB library and in Tranglo’s digital transformation.

The importance of big data

Big data refers to data too large to process and analyse with traditional software. Companies that use data analytics make decisions 5x faster than the ones that do not. In a fast-moving marketplace, that extra speed and accuracy can be the difference between success and failure.

At Tranglo, our digital transformation takes advantage of big data analysis to produce business insights and intelligence that help business partners make better decisions. But any reliable big data ecosystem needs a strong foundation — data engineering.

Understanding data engineering

Data engineering describes any software approach to handling information systems. At Tranglo, it refers to the process of designing and building data pipelines.

A data pipeline is any automated software that facilitates the flow of data from one location to another. A lot can go wrong during the data flow process, so getting the pipeline right is crucial. A well-developed pipeline can protect against data corruption, bottlenecks, or data conflicts and duplicates.
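
As a rough illustration of those safeguards, here is a minimal Python sketch of a single pipeline stage that drops malformed and duplicate records before they reach their destination. The record fields (`id`, `amount`) and the function name `clean_records` are assumptions made for this example and are not taken from Tranglo’s pipelines.

```python
from typing import Iterable, Iterator


def clean_records(records: Iterable[dict]) -> Iterator[dict]:
    """Yield only well-formed records, skipping duplicates by id."""
    seen_ids = set()
    for record in records:
        # Guard against corruption: required fields must exist with the right type.
        if not isinstance(record.get("id"), str):
            continue
        if not isinstance(record.get("amount"), (int, float)):
            continue
        # Guard against duplicates: keep the first record seen for each id.
        if record["id"] in seen_ids:
            continue
        seen_ids.add(record["id"])
        yield record


source = [
    {"id": "tx-1", "amount": 100.0},
    {"id": "tx-1", "amount": 100.0},   # duplicate
    {"id": "tx-2", "amount": "oops"},  # corrupted amount
    {"id": "tx-3", "amount": 42},
]
cleaned = list(clean_records(source))
print([r["id"] for r in cleaned])  # ['tx-1', 'tx-3']
```

A production pipeline would usually log or quarantine rejected records rather than silently skipping them.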

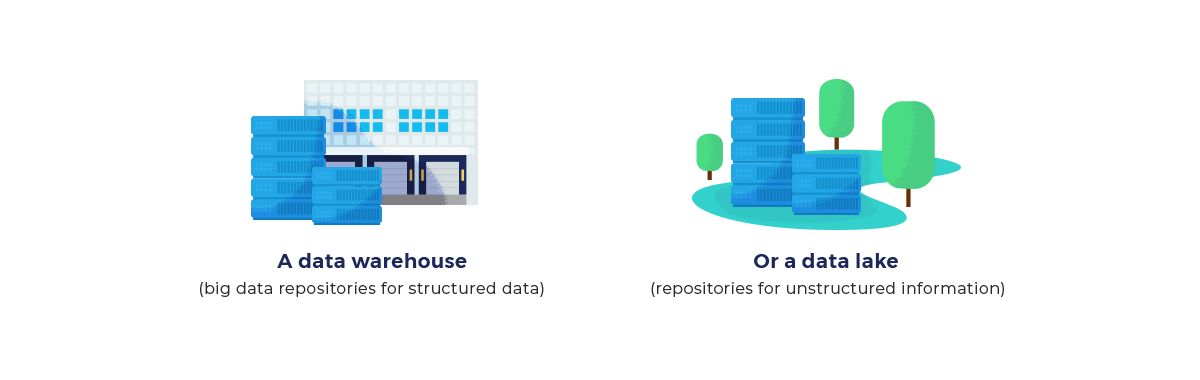

Data pipelines move (and sometimes process) data on its way to its destination.

To implement Tranglo’s data warehouse plan, we needed a robust and scalable ETL and data streaming platform (more on streaming data soon). Any such platform serves as the foundation for a powerful data engineering infrastructure.

A reliable data streaming platform helps Tranglo transport data from the current transactional databases to new locations where we can pull further insights from it.

Streaming data takes focus here, but what is it exactly?

What is streaming data?

In computing, streaming data refers to a continuous flow of data from any source. These data streams typically have no discernible start or finish, and can be produced by a wide variety of sources:

- User activity

- Server log files

- Sensor inputs

- Networking devices

- Location data

Stream processing technology builds on top of streaming data: it involves parsing, analysing and acting on streaming data as it is produced. This is different from batch processing, where information is first collected into “batches” and then analysed.
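
To make the contrast concrete, here is a small Python sketch that computes the same average in both styles. The function names and the sample readings are illustrative assumptions and do not reflect any particular framework.

```python
from typing import Iterable, Iterator


def batch_average(values: list) -> float:
    """Batch style: wait for the whole batch, then analyse it once."""
    return sum(values) / len(values)


def streaming_average(values: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit an updated average as each value arrives."""
    total = 0.0
    count = 0
    for value in values:
        total += value
        count += 1
        yield total / count


readings = [10.0, 20.0, 30.0]
print(batch_average(readings))            # 20.0
print(list(streaming_average(readings)))  # [10.0, 15.0, 20.0]
```

The streaming version has a usable answer after the first reading, while the batch version only answers once every reading has been collected.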

Streaming data is present in several different industries, from finance to retail to consumer transportation. For example, a bank might monitor real-time stock data and rebalance its clients’ investment portfolios based on the appropriate risk level.

What is Kafka?

Tranglo uses a microservices architecture, a system organisation that requires our data platform to handle real-time streaming data. That information needs to be synchronised between disparate sources and destinations and delivered in a point-to-point fashion (mesh network). That’s where Kafka comes in.

Kafka was born out of a LinkedIn project to better track the data produced by user behaviour on the site. Eventually, the software became publicly available under the Apache Software Foundation — hence, Apache Kafka.

Apache Kafka is an open-source software framework that can store, read and analyse real-time data streams (it is often called an event streaming platform). Since it is open-source, it’s free for anyone to use, and it’s maintained, updated and improved by a dedicated group of volunteers. It’s also a widely popular tool, used by companies like the New York Times, Pinterest and Ancestry.com.

What makes Apache Kafka interesting is that it is designed to run on a distributed network. By doing this, it can leverage the processing power that comes from living on many different servers (these servers are grouped into Kafka clusters).

Understanding how Kafka works

Kafka Connect imports and exports data streams between your existing systems (for example, a relational database or Hadoop) and your Kafka clusters. The Kafka community already offers hundreds of connectors, so you typically don’t need to write your own.
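
In practice, an existing connector is configured rather than coded. The sketch below builds the kind of JSON body that Kafka Connect’s REST API accepts at `POST /connectors`, using the FileStreamSource example connector from the Apache Kafka quickstart. The helper name `file_source_connector`, the file path and the topic name are assumptions for illustration, and nothing is sent over the network.

```python
import json


def file_source_connector(name: str, path: str, topic: str) -> dict:
    """Build the request body for creating a connector via POST /connectors."""
    return {
        "name": name,
        "config": {
            "connector.class": "FileStreamSource",
            "tasks.max": "1",
            "file": path,    # file to read lines from (assumed path)
            "topic": topic,  # Kafka topic the lines are written to
        },
    }


payload = file_source_connector("local-file-source", "test.txt", "connect-test")
print(json.dumps(payload, indent=2))
```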

The Apache Kafka Streams API lets you build apps and services that process streams of data. With the Kafka Streams client library, input is read from topics to produce an output for other topics. It also offers tools for data stream processing, like transformations, windowing and time-based processing.
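
Kafka Streams itself is a Java library, so the following Python is not its API. It is only a sketch of what windowing means: events are grouped into fixed, non-overlapping time windows and counted per key. The event tuples and the function name `tumbling_window_counts` are assumptions for this example.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per key within fixed, non-overlapping time windows."""
    counts = Counter()
    for timestamp, key in events:
        # Each event belongs to exactly one window, identified by its start time.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# (timestamp in seconds, event key)
events = [(0, "login"), (12, "payment"), (59, "login"), (61, "login")]
result = tumbling_window_counts(events, window_seconds=60)
print(result)
# {(0, 'login'): 2, (0, 'payment'): 1, (60, 'login'): 1}
```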

The platform is best used with a schema registry per Kafka cluster to ensure data is read correctly. A schema is a data contract between where the information is coming from and where it is heading. The schema registry associates schemas and rules with Kafka topics.
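
The idea of a data contract can be sketched in a few lines of Python. Real schema registries typically work with formats such as Avro, JSON Schema or Protobuf; this example simplifies a schema to a mapping of field names to types, and `PAYMENT_SCHEMA` and `conforms` are hypothetical names.

```python
PAYMENT_SCHEMA = {"id": str, "amount": float, "currency": str}


def conforms(record: dict, schema: dict) -> bool:
    """Return True if the record has exactly the schema's fields and types."""
    if record.keys() != schema.keys():
        return False
    return all(isinstance(record[field], expected)
               for field, expected in schema.items())


good = {"id": "tx-1", "amount": 99.5, "currency": "MYR"}
bad = {"id": "tx-2", "amount": "99.5", "currency": "MYR"}  # amount is a string
print(conforms(good, PAYMENT_SCHEMA))  # True
print(conforms(bad, PAYMENT_SCHEMA))   # False
```

A producer that checks records this way before publishing lets consumers rely on the agreed shape of the data.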

What can Kafka Connectors Board help you achieve?

Kafka Connectors Board is an open-source Kafka library. We built this Apache Kafka project to make stream processing easier for fintech companies (and anyone trying to send money around the world!).

With KCB, you can track, manage and filter all the connectors you’ve deployed using Kafka Connect.

You can also search your Kafka connectors by name or sort your list by status. Connector tasks can be started, stopped or restarted in bulk. All of this is done via a simple visual interface.
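
The Python sketch below is not KCB’s source code. It only illustrates how name filtering, status sorting and a bulk restart could be expressed on top of the Kafka Connect REST API. The status objects follow the shape returned by `GET /connectors/{name}/status`; the connector names, the function names and the base URL (`http://localhost:8083`, Kafka Connect’s default port) are assumptions. The functions build requests without sending them.

```python
def filter_by_name(statuses, text):
    """Keep connectors whose name contains the search text (case-insensitive)."""
    return [s for s in statuses if text.lower() in s["name"].lower()]


def sort_by_status(statuses):
    """Order connectors by state, then by name."""
    return sorted(statuses, key=lambda s: (s["connector"]["state"], s["name"]))


def bulk_restart_requests(statuses, base_url="http://localhost:8083"):
    """Build one (method, url) pair per task to restart; nothing is sent."""
    return [
        ("POST", f"{base_url}/connectors/{s['name']}/tasks/{t['id']}/restart")
        for s in statuses
        for t in s["tasks"]
    ]


statuses = [
    {"name": "orders-sink", "connector": {"state": "RUNNING"},
     "tasks": [{"id": 0, "state": "RUNNING"}]},
    {"name": "orders-source", "connector": {"state": "FAILED"},
     "tasks": [{"id": 0, "state": "FAILED"}, {"id": 1, "state": "FAILED"}]},
]
print([s["name"] for s in sort_by_status(statuses)])
# ['orders-source', 'orders-sink']
restarts = bulk_restart_requests(filter_by_name(statuses, "source"))
print(restarts)
```

Sorting alphabetically by state happens to put `FAILED` connectors ahead of `RUNNING` ones, which is usually what you want to see first.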

Getting started with the Kafka Connectors Board is incredibly simple. Here’s a quick crash course:

- Install Node.js. KCB is built with Angular, which is installed through npm, the Node.js package manager. Install the latest version of Node.js and confirm that npm is available (for example, with `npm -v`).

- Install the Angular command-line interface. Open a new terminal window and run `npm install -g @angular/cli`.

- Clone the library into your directory of interest, install its dependencies and start the development server (for an Angular CLI project, this is typically `npm install` followed by `ng serve`). Then visit http://localhost:4200/ to access the KCB interface.

Bringing it all together

We can liken our data infrastructure to a tree (the tree itself is built using Kafka).

- Our data pipelines are like roots, collecting data and nutrients from various sources

- Those pipelines aggregate all that data into a single trunk (our data warehouses)

- The tree (our data streaming platform, Kafka) takes those nutrients and sends them to the branches, leaves and fruits (analytics modules, business insights, etc.)

Another way to think about it is to describe the data streaming platform as the central nervous system of a business:

- Separate signals from the senses aggregate in the brain to form thoughts and ideas

- Similarly, Kafka centralises data from separate sources and transports them to actionable locations

Developing this data engineering ecosystem is how we turn the data we generate every day into usable, actionable business intelligence.

Try Tranglo’s Kafka Connectors Board today

The Apache Kafka platform is scalable and efficient. It also enables rapid real-time data stream processing. With KCB, you get more granular insight into, and control over, the exchange of data from the Kafka cluster to the end system. Curious to give it a shot yourself? Experiment with Kafka Connectors Board today!

Frequently asked questions

What is a Kafka library?

Libraries add functionality to Apache Kafka. For example, the Kafka Streams API lets you create fault-tolerant and scalable stream processing applications. Kafka Connectors Board enables you to manage the Kafka connectors you’ve already deployed.

What is Kafka used for?

Apache Kafka has many applications: connecting rideshare drivers with riders, updating chain-wide retail inventory, monitoring player-game interactions and more. At its core, Kafka is a high-performance software platform that you can use to analyse, move and process massive amounts of data updating in real time.

Why is Kafka so popular?

Kafka is relatively easy to set up, and it can connect with your organisation’s existing systems. With more business decisions relying on vast amounts of aggregate data, Kafka is an invaluable tool for companies like Netflix, Uber, PayPal and more.